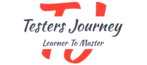

Software testing is an essential part of ensuring the quality and reliability of any application. Whether you’re a beginner or an experienced tester, understanding the fundamental principles of software testing can help you improve your approach and create more effective testing strategies. In this article, we’ll explore the 7 Principles of Software Testing in simple terms, complete with examples, clarifications, and bonus tips to enhance your understanding.

1. Testing Shows the Presence of Defects

Clarification: The main goal of software testing is not to confirm that the software works flawlessly, but to identify and reveal any problems or defects in the system. Testing helps find flaws in the software under specific conditions or inputs. However, just because no defects are found during testing doesn’t mean the software is perfect. It simply means that no defects were detected during the tests that were conducted.

In other words, testing is a tool for discovering problems, but it can’t guarantee the software is completely defect-free unless every possible situation has been tested. And even then, there could still be defects lurking in areas that weren’t tested or situations that weren’t anticipated.

Detailed Example:

Let’s take a calculator app as an example.

- Scenario 1 (Basic Test Case): You open the calculator app and input “2 + 2.” The app correctly shows the result as 4.

- This is a success! The calculator worked fine for this basic test case, and everything seems perfect so far.

- But, does this mean the calculator app is completely flawless? Not necessarily.

- Scenario 2 (Testing Other Operations): Now, let’s try other operations:

- You input “5 – 3” and get 2.

- You input “6 * 2” and get 12.

- These results seem correct as well, but the app may still have issues that aren’t obvious from these simple operations.

- Scenario 3 (Edge Case Test): What happens when you input something like “5 / 0” (divide by zero)? Dividing by zero is undefined in mathematics, so a well-behaved calculator shows a clear error message instead of crashing. If your app doesn’t handle this edge case properly (for example, by showing a message like “Error: Division by zero”), then it contains a defect. Even though the calculator passed the basic test cases (like addition and subtraction), it still has a defect (failure to handle division by zero).
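The three scenarios can be sketched as a small test run. This is only an illustration under stated assumptions: `calculate` is a hypothetical, deliberately naive implementation with no divide-by-zero handling, and the function names are invented for this example.

```python
import operator

# Hypothetical, deliberately naive calculator: it has no special
# handling for division by zero.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(left, symbol, right):
    """Apply the operation named by `symbol` to the two operands."""
    return OPERATIONS[symbol](left, right)


def run_basic_tests():
    """Scenarios 1 and 2: the basic operations all pass."""
    assert calculate(2, "+", 2) == 4
    assert calculate(5, "-", 3) == 2
    assert calculate(6, "*", 2) == 12
    return True


def run_edge_case_test():
    """Scenario 3: dividing by zero should yield a friendly error message.

    Returns True when the case is handled gracefully, False when the
    defect shows up.
    """
    try:
        result = calculate(5, "/", 0)
    except ZeroDivisionError:
        return False  # the app "crashed": the defect is exposed
    return result == "Error: Division by zero"


if __name__ == "__main__":
    print("basic tests passed:", run_basic_tests())
    print("edge case handled:", run_edge_case_test())
```

The basic tests pass, yet the edge-case check reports the defect. A test suite that stopped after Scenario 2 would have shown nothing wrong.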

Key Point:

- Testing doesn’t guarantee perfection: The fact that the calculator worked correctly with basic operations (like 2 + 2 = 4) doesn’t mean it is flawless. More complex or edge cases (like dividing by zero) may expose defects that were not detected during testing.

- Undetected defects: Sometimes, defects may exist but aren’t found in the tests that were run. This is why it’s important to test in as many different situations as possible, including unusual or unexpected inputs, to uncover as many issues as you can.

In summary, software testing helps reveal defects, but it can’t guarantee that a system is defect-free unless it is thoroughly tested in all possible scenarios. Even after extensive testing, some defects might still exist in parts of the software that haven’t been tested or considered.

2. Exhaustive Testing is Impossible

Clarification: Exhaustive testing means testing every possible input, condition, and scenario in a system to make sure it works perfectly under all circumstances. In theory, this sounds like a good idea, but in practice, it’s impossible for most systems due to the time, resources, and effort it would require. The number of possible inputs and combinations grows very quickly, making it unfeasible to test everything.

The goal of testing isn’t to test every single possibility but to focus on testing the most important cases, such as typical inputs, boundary cases, and possible edge cases. This makes the testing process more practical and efficient, without compromising the quality of the tests.

Detailed Example:

Let’s say you’re testing a form with an input field for age. The field accepts ages from 18 to 60.

- Exhaustive Testing Approach:

If you were to perform exhaustive testing, you would need to test:

- Every valid input from 18 to 60. This means testing each age value in the range: 18, 19, 20, all the way up to 60. That is 43 test cases in total (60 – 18 + 1 = 43).

- All invalid inputs, like ages less than 18 or greater than 60. For example, you’d test invalid values like 0, -1, 100, etc.

- Every combination with the other fields on the form. What if the age field has a valid age (e.g., 25), but the email field is empty?

- What if the name field has a special character or an unusually long string?

Practical Approach:

Since exhaustive testing isn’t feasible, we focus on testing a smaller, more manageable set of cases. The key here is to focus on:

- Boundary Values:

- These are the edge values of a range. For example, if the age field accepts ages between 18 and 60, the boundary values would be 18 and 60. These are critical because they are often where errors or defects are likely to occur (e.g., an off-by-one error).

- Test case examples:

- 18 (lower boundary)

- 60 (upper boundary)

- Invalid Inputs:

- Test for values that should be rejected by the system. These are inputs outside the valid range or inputs that don’t make sense.

- Test case examples:

- -1 (an invalid, negative age)

- 0 (an age less than the minimum allowed)

- 100 (an age above the maximum allowed)

- Random Valid Values:

- It’s also a good idea to test a few random valid values within the accepted range. These are typical cases that a user might enter during normal use of the system.

- Test case examples:

- 25 (a valid age in the middle of the range)

- 45 (another valid age)

By focusing on these key test cases, you can cover a broad range of scenarios without testing every possible input. This makes testing manageable and still effective at finding defects.
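The selected cases can be written down as a small table-driven test. This is a minimal sketch: `is_valid_age` is a hypothetical stand-in for the form’s validation, and the values 17 and 61 are an addition not listed above, included on the assumption that values just outside each boundary are worth checking for off-by-one errors.

```python
MIN_AGE = 18
MAX_AGE = 60


def is_valid_age(age):
    """Return True when `age` is within the accepted range (inclusive)."""
    return MIN_AGE <= age <= MAX_AGE


# (input, expected result, why this case was chosen)
TEST_CASES = [
    (18, True, "lower boundary"),
    (60, True, "upper boundary"),
    (17, False, "just below the lower boundary (assumed extra case)"),
    (61, False, "just above the upper boundary (assumed extra case)"),
    (-1, False, "negative age"),
    (0, False, "below the minimum"),
    (100, False, "above the maximum"),
    (25, True, "typical valid value"),
    (45, True, "typical valid value"),
]


def run_age_tests():
    """Return the cases whose actual result differs from the expected one."""
    failures = []
    for age, expected, reason in TEST_CASES:
        if is_valid_age(age) != expected:
            failures.append((age, reason))
    return failures


if __name__ == "__main__":
    print(len(TEST_CASES), "cases,", len(run_age_tests()), "failures")
```

Nine cases stand in for the 43 valid values and the unbounded set of invalid ones.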

Why Exhaustive Testing is Impractical:

- Exponential Growth of Test Cases: As mentioned earlier, adding more fields or more conditions increases the number of test cases exponentially. For example, if you have 2 fields with 3 possible inputs each, you have 9 test cases. If you add just one more field, the number of test cases becomes 27. The more fields you add, the more combinations you must test, which quickly becomes unmanageable.

- Time and Resource Constraints: Testing everything would take too long and require too many resources. It would not be cost-effective or realistic, especially with large and complex systems.

- Focus on Critical Areas: Instead of testing everything, it’s better to focus on testing the most important areas (boundary cases, invalid inputs, and typical scenarios) to identify the most common and impactful defects.
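The growth in combinations is easy to check by enumeration. The sketch below assumes, as in the example above, that each field has 3 possible inputs; `count_combinations` is a name invented for this illustration.

```python
from itertools import product


def count_combinations(field_count, inputs_per_field=3):
    """Count every combination of inputs across `field_count` fields."""
    inputs = range(inputs_per_field)
    return sum(1 for _ in product(inputs, repeat=field_count))


for fields in (2, 3, 5, 10):
    print(fields, "fields ->", count_combinations(fields), "combinations")
```

With 3 inputs per field, 2 fields give 9 combinations, 3 fields give 27, and 10 fields already give 59,049.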

Summary:

Exhaustive testing, where every possible input and condition is tested, is impossible for most systems due to the sheer number of test cases. Instead, a more practical approach is to focus on key test cases, such as:

- Boundary values (e.g., 18 and 60 for the age field),

- Invalid inputs (e.g., -1 and 100),

- Random valid inputs (e.g., 25 and 45).

This approach helps ensure that the most important and likely defects are found without overwhelming the testing process.

3. Early Testing Saves Time and Costs

Clarification: The earlier you find a defect in the software development process, the cheaper and faster it is to fix. The cost and effort required to fix issues increase as the project moves through different phases—such as design, development, and testing.

For example, if a defect is discovered during the design phase (when the product is just being planned), it’s usually much easier and less expensive to fix than if it’s found after the product has already been developed or released. Identifying defects early in the process helps you avoid spending time and resources on reworking parts of the system later, making it more efficient and cost-effective.

Detailed Example:

Imagine you’re building a file upload feature for a web application. The requirement states that users should be able to upload files up to 10MB in size. However, the design and later development specify the limit as 5MB.

- Early Detection in the Requirements Phase:

If the issue is identified early in the requirements phase (before any code is written), you can quickly correct the discrepancy by updating the requirement to match the design or by adjusting the design to match the original requirement. This means no code has been written yet, so the fix only requires a small change in documentation or planning.

- Impact of fixing early: The cost to fix this problem would be minimal: just a quick update to documents and requirements.

- Later Detection in the Development or Testing Phase:

Now, suppose the design and development proceed without noticing the mismatch, and the application is built to accept only 5MB files. If you discover the problem during the testing phase, it will likely require a larger change. You might have to go back and update the code, redo parts of the implementation, and retest everything.

- Impact of fixing later: This fix will be much more costly: it could take days or even weeks to fix the code, retest, and ensure everything works as expected. Additionally, developers might have already worked on other features, which means fixing the issue would disrupt their current work.

- After Release:

If this issue is found after the software has been released to users, the cost and impact are even higher. You would need to fix the bug, release an update, and potentially deal with customer complaints or lost trust. This often leads to higher support costs, reputation damage, and customer dissatisfaction.
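One way to surface this kind of mismatch before release is to write a check directly from the requirement rather than from the implementation. The sketch below is an assumption-laden illustration: the constants and function names are hypothetical, and 1MB is taken to mean 1024 × 1024 bytes.

```python
MB = 1024 * 1024  # assumption: 1MB = 1024 * 1024 bytes

# Taken from the requirement in the example: uploads of up to 10MB are allowed.
REQUIRED_MAX_UPLOAD_BYTES = 10 * MB

# Hypothetical implementation constant, reflecting the 5MB limit from the design.
IMPLEMENTED_MAX_UPLOAD_BYTES = 5 * MB


def accepts_upload(size_bytes, limit=IMPLEMENTED_MAX_UPLOAD_BYTES):
    """Return True when a file of `size_bytes` is within the upload limit."""
    return 0 < size_bytes <= limit


def check_against_requirement(limit=IMPLEMENTED_MAX_UPLOAD_BYTES):
    """Return a list of mismatches between the requirement and the behaviour."""
    problems = []
    if not accepts_upload(REQUIRED_MAX_UPLOAD_BYTES, limit):
        problems.append("a 10MB file is rejected, but the requirement allows it")
    if accepts_upload(REQUIRED_MAX_UPLOAD_BYTES + 1, limit):
        problems.append("a file just over 10MB is accepted")
    return problems


if __name__ == "__main__":
    for problem in check_against_requirement():
        print("MISMATCH:", problem)
```

Run against the 5MB implementation, the check reports that a 10MB file is rejected. Run with a 10MB limit, it reports nothing.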

Key Point:

- Finding defects early (during planning, requirements, or design) is much cheaper and faster compared to finding them later (during development or after release).

- Early testing helps identify potential problems before they become bigger issues that can delay the project or increase costs.

Practical Tip:

Start testing activities as early as possible in the Software Development Life Cycle (SDLC) to avoid costly mistakes later. Here are a few ways to begin testing early:

- Review Requirements and Specifications:

- Early review of the project requirements and specifications helps ensure that what is being built matches what was intended. This allows you to spot discrepancies or potential issues in the requirements themselves, before any coding begins.

- Example: Before starting any design or coding, check if the requirement “users can upload files up to 10MB” matches with any technical limitations or system capacities. If there’s a conflict, you can solve it right away.

- Test Prototypes or Mockups:

- If there’s a prototype or a mockup of the system, start testing it for usability, flow, and clarity even before the development begins. You can identify issues in the design, user interface, or user experience (UI/UX) early, which will be easier to fix than after the system is fully built.

- Example: If you have a clickable prototype of the upload feature, try out the file upload process early to ensure it meets user expectations. If users find it difficult or confusing, you can adjust the design before investing time in building the full feature.

- Test with Stakeholders:

- Early involvement of stakeholders (such as product owners, users, or customers) in the review process helps catch misunderstandings or miscommunications in the requirements. Getting their feedback early can help clarify any ambiguous features and adjust the course of development as needed.

Why Early Testing is So Important:

- Reduced Rework: When you find defects early, you avoid having to rework large parts of the system. This means less time and fewer resources spent fixing issues.

- Lower Costs: The longer you wait to fix a defect, the more expensive it becomes. Early testing keeps the cost of fixing problems low and manageable.

- Faster Time to Market: Fixing defects early also means the development process moves forward more smoothly. If defects are caught later in the cycle, they can delay the project as developers need to revisit and modify existing code.

- Improved Quality: Early testing helps ensure that the product is built correctly from the start, which leads to a higher-quality product that better meets user needs.

Summary:

Early testing saves both time and money. Detecting and fixing defects early in the development cycle is far cheaper and easier than waiting until later stages (like during development or after release). You can start testing activities such as reviewing requirements, testing prototypes, and getting early feedback from stakeholders to catch issues before they snowball into expensive problems. By addressing issues early, you ensure the software is built right the first time, reducing rework and making the overall development process smoother and faster.

4. Defect Clustering

Clarification: Defect clustering refers to the phenomenon where a small number of modules or features in a software system contain the majority of defects. This is often described in terms of the Pareto Principle (also called the 80/20 rule), which states that roughly 80% of the problems come from 20% of the areas. In software testing, this means that instead of defects being spread out evenly across the entire system, most of the defects tend to accumulate in a few areas, usually those that are more complex or have been altered the most.

By recognizing and focusing on these areas that are likely to have the most defects, you can optimize your testing efforts to find more issues in less time. This allows testers to be more efficient and effective in their work.

Detailed Example:

Imagine you are testing a content management system (CMS). A CMS typically has various features, such as:

- User authentication (login, registration)

- Content creation (writing and publishing articles)

- Media upload (images, videos, etc.)

- Database management (storing and retrieving content)

Let’s say, based on your past experience or analysis of the application, you know that the media upload feature is prone to having more defects. Why?

- Complexity: The media upload feature likely involves multiple systems working together—user input validation, file type checks, file size restrictions, storage management, and so on. More components mean more potential for things to go wrong.

- Frequent changes: If the media upload feature has undergone several changes during development (for example, adding new file formats or improving the UI), this increases the chances of introducing bugs.

Now, suppose that when you test the system, you find that most of the defects (such as errors when uploading files, issues with specific file types, or problems with the storage system) are concentrated in the media upload module.

- Why this happens: The complexity of the feature means it has many interactions with other parts of the system, making it more likely to contain defects. This is a classic case of defect clustering—most defects are located in just one area of the application (the media upload feature), even though the system has many other features.

- Prioritization: Given that this module is responsible for most of the defects, it makes sense to prioritize it for intensive testing. This could mean testing the media upload feature more thoroughly, running more test cases, and simulating a variety of edge cases to ensure it works perfectly. By focusing your testing efforts on this high-risk area, you can catch a large number of defects that might otherwise have gone unnoticed.

Practical Tip:

To make testing more efficient, it’s important to identify high-risk areas that are more likely to have defects. This is where the concept of defect clustering becomes useful.

- Use Historical Data:

- Review past releases or versions of the application to see which modules or features had the most bugs in the past. For example, if the media upload feature has always had defects in previous versions of the CMS, there’s a good chance it will have defects again.

- Example: Look at the bug reports and test results from previous projects or versions of the application. If there were consistently issues in the user authentication module (such as login failures or password reset problems), that might be a high-risk area to focus on in future testing.

- Consult Developer Insights:

- Developers often have a good understanding of which parts of the code are more complex or have been changed the most. They can provide insights into areas that might be prone to defects.

- Example: Developers might tell you that the file upload module was recently refactored to support new file formats, making it more likely to have bugs related to new changes.

- Allocate More Testing Effort:

- Once high-risk areas are identified, allocate more time and resources to test those specific modules. This might involve running additional test cases, using automated testing for quicker feedback, or doing exploratory testing to find bugs that were missed in scripted tests.

- Focus on High-Impact Features:

- Focus your testing efforts on the areas that are most important to the end users. For example, in an online shopping app, you would want to focus testing on the checkout and payment processing features, since bugs in those areas would have the highest impact on the user experience.
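Using historical data in this way can be sketched as a simple Pareto-style analysis of defect counts per module. The counts below are made up purely for illustration (real numbers would come from your bug tracker), and `top_defect_modules` is a hypothetical helper name.

```python
def top_defect_modules(defects_by_module, share=0.8):
    """Return the smallest set of modules that accounts for `share` of defects.

    Modules are taken in order of descending defect count until the
    cumulative count reaches the requested share of the total.
    """
    total = sum(defects_by_module.values())
    ranked = sorted(
        defects_by_module.items(), key=lambda item: item[1], reverse=True
    )
    selected, running = [], 0
    for module, count in ranked:
        if running >= share * total:
            break
        selected.append(module)
        running += count
    return selected


# Made-up counts for illustration only; real numbers would come from
# the project's bug tracker.
example_counts = {
    "media_upload": 42,
    "user_authentication": 9,
    "content_creation": 5,
    "database_management": 4,
}

if __name__ == "__main__":
    print(top_defect_modules(example_counts))
```

With these illustrative numbers, two of the four modules account for at least 80% of the recorded defects, so they would be the first candidates for extra testing effort.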

Why Defect Clustering Happens:

- Complexity: Some modules are simply more complex than others, requiring more lines of code, multiple systems to interact, or difficult algorithms. More complexity = more potential for mistakes.

- Frequent Changes: Parts of the system that are frequently updated or modified (like the media upload feature in the example) are more likely to introduce new bugs because they are in active development.

- High Visibility: Some parts of the system (like login or checkout features) are used more often by users, which means they are more likely to have bugs exposed, especially when tested in real-world conditions.

Summary:

Defect clustering refers to the tendency of software bugs to concentrate in a small number of modules or features, rather than being spread out evenly across the entire system. This is often in line with the Pareto Principle (80/20 rule), where 80% of the defects are found in 20% of the application. By identifying these high-risk areas early—through historical data or developer insights—you can prioritize testing efforts and catch more defects in less time. Focus your testing on these areas, such as complex or frequently changed modules, to ensure better test coverage and higher defect detection rates.

5. Pesticide Paradox

Clarification: The Pesticide Paradox is a concept in software testing that says repeatedly running the same set of test cases over and over again will eventually stop finding new defects. This is similar to how pests can become resistant to the same pesticide if it’s used repeatedly without any changes. In software testing, if you continue testing the same areas in the same way, you may stop uncovering new issues, and the software may appear to be “clean” even though it’s not.

To avoid this, testing needs to be constantly refreshed. New test cases, different testing methods, or trying out new environments and scenarios can help you find defects that might have been missed in previous tests.

Detailed Example:

Let’s say you’re testing a login feature of a website.

- Initially, you create a set of test cases to check whether the login works as expected. These tests include:

- Correct username and password.

- Incorrect username and correct password.

- Correct username and incorrect password.

- Empty username or password fields.

- Run after run, these tests pass and no new defects turn up. This situation is the Pesticide Paradox. You keep running the same tests, but because they haven’t changed, they are no longer helpful in finding new issues. The software may seem like it’s “clean,” but that doesn’t necessarily mean it is.

New Test Cases and Scenarios:

To break the Pesticide Paradox, you need to refresh your testing approach by adding new test cases or testing in different environments. For example:

- Test Case 1: Different Browsers

What if the user is using a different browser, like Firefox or Safari? Some browsers may behave differently, especially with features like autofill, cookies, or session management.

- You might find that the login works fine in Chrome but fails in Firefox due to a session handling issue.

- Test Case 2: Different Device Types

What if a user logs in from a mobile device or a tablet instead of a desktop? There may be issues with the responsive design or the way the app handles smaller screens.

- You could discover that on mobile devices, the login button is hard to click due to poor placement or small font size.

- Test Case 3: Different Network Conditions

What happens if the internet connection is slow, or the user is on a poor network? The login process may fail to complete or time out.

- Running tests under conditions of low bandwidth or high latency might reveal bugs related to session timeouts or error handling.

By adding new test cases and testing in new contexts (like different browsers, devices, or network conditions), you’re introducing new perspectives that may uncover defects that the original set of tests didn’t. This helps break the Pesticide Paradox by constantly refreshing your testing approach and finding new defects that may otherwise go unnoticed.
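One way to keep the contexts varying from run to run is to build a matrix of browsers, devices, and network conditions and draw a different subset each time. The sketch below is only an illustration: the context values and the function names are hypothetical, and it selects contexts without actually driving a browser.

```python
import random
from itertools import product

# Hypothetical context values; replace with whatever the project supports.
BROWSERS = ["Chrome", "Firefox", "Safari"]
DEVICES = ["desktop", "tablet", "mobile"]
NETWORKS = ["fast", "slow", "high-latency"]


def all_contexts():
    """Every browser/device/network combination for the login tests."""
    return list(product(BROWSERS, DEVICES, NETWORKS))


def contexts_for_run(run_number, sample_size=5):
    """Pick a reproducible subset of contexts for one test run.

    Seeding with the run number keeps a given run repeatable, while
    different run numbers will usually yield different subsets.
    """
    rng = random.Random(run_number)
    return rng.sample(all_contexts(), sample_size)


if __name__ == "__main__":
    for run in (1, 2):
        print("run", run)
        for browser, device, network in contexts_for_run(run):
            print("  login test on", browser, device, network)
```

Because each run is seeded, a failure found in one run can be reproduced by reusing the same run number.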

Practical Tip:

To make sure that your testing remains effective and uncovers new defects, you should regularly review and revise your test cases. You can combine automated testing (which tests frequently used features) with exploratory testing (where testers use their creativity to test new scenarios or areas that automated tests might miss).

Here’s how you can do this:

- Regularly Review Test Cases:

- As the software evolves, so should the test cases. For example, if new features are added, you need to write new tests. If old tests have already passed multiple times without finding defects, consider updating them or replacing them with new scenarios.

- Example: If the login page now supports social media logins (e.g., Facebook, Google), you’ll need to create new tests to verify those integrations, in addition to the existing username/password tests.

- Combine Automated and Exploratory Testing:

- Automated testing is great for running repetitive tests on features that don’t change often, such as logging in or form submission. But automated tests will only catch defects that were already anticipated in the test cases.

- Exploratory testing is when testers take a more creative approach and test areas that might not be covered by automation. Testers explore the application from the perspective of different users, trying to break the system and uncover defects in unexpected areas. This can be especially useful for discovering edge cases.

- Expand Testing into New Areas:

- Once you feel confident that your test cases are covering the main functionality, start expanding to new areas. This might include:

- Testing different user roles (e.g., admin vs. regular user).

- Testing with different languages or locales to ensure global compatibility.

- Testing on different operating systems or in virtual environments.

Why the Pesticide Paradox Happens:

- Test Case Repetition: Over time, as the same tests are executed, they stop finding new defects because the defects those tests can detect have already been found and fixed.

- Lack of Variation: If you always test the same things in the same way, you will miss any defects that only appear under different conditions or scenarios. Software is complex and behaves differently based on context, and without variation, your tests will no longer be effective.

Summary:

The Pesticide Paradox highlights that repeatedly running the same tests can eventually become ineffective at finding new defects. Just like pests developing resistance to the same pesticide, software can appear “clean” if testing methods aren’t refreshed. To avoid this, regularly update and refresh your test cases by introducing new test scenarios, such as testing different browsers, devices, or network conditions. Combining automated testing with exploratory testing allows you to ensure comprehensive test coverage and continuously uncover new defects.

6. Testing is Context-Dependent

Clarification: The type of testing you perform should match the nature, purpose, and audience of the application you’re testing. Different types of software require different approaches to testing, depending on what they’re meant to do and who will be using them. What works for one type of application might not work for another, even if they are both digital products.

Detailed Example:

Let’s break it down with three very different types of applications:

- Banking Application

- What’s Important:

- Security: Since users are dealing with sensitive financial data, security is a top priority. Any vulnerabilities can lead to data breaches, identity theft, or loss of money.

- Accuracy: Financial calculations must be correct at all times, especially when transferring money or calculating interest rates. Errors can result in significant financial loss.

- Performance: The application should respond quickly and handle a large number of transactions simultaneously without crashing. Downtime or slow responses can frustrate users and potentially affect their finances.

- Testing Focus:

- Security Testing: Check for encryption, safe login procedures (like two-factor authentication), and any weaknesses in the system that could be exploited by hackers.

- Load Testing: Simulate many users accessing the app at once to ensure it can handle peak traffic without slowing down or crashing.

- Functional Testing: Test all functions of the app like money transfers, bill payments, and transaction history to make sure everything works perfectly.

- Example: Imagine you’re testing a mobile banking app, and you need to check if the transfer of funds between accounts happens accurately. If there’s a bug that makes the transfer amount slightly incorrect, the result could lead to customers losing trust in the app, or even worse, financial discrepancies.

- Social Media App

- What’s Important:

- User Experience: Social media apps must be user-friendly. People should easily understand how to post, share, like, or comment without frustration.

- Responsiveness: The app should adjust well across different devices—smartphones, tablets, and desktops—and work smoothly on both iOS and Android platforms.

- Cross-Platform Compatibility: The app needs to perform equally well on different devices and browsers to ensure no user feels left out.

- Testing Focus:

- Usability Testing: Observe how real users interact with the app to identify pain points (like confusing buttons or difficult navigation) and make the interface more intuitive.

- Cross-Browser Testing: Make sure the app looks and works well on all major browsers (Chrome, Firefox, Safari) and operating systems.

- Performance Testing: Check that images, videos, and posts load quickly without any delay, even with many users browsing at the same time.

- Example: Imagine you’re testing a feature where users upload photos. If the app is too slow in loading or cropping images, users might get frustrated and abandon the app. Testing how quickly and efficiently the app handles such tasks is crucial for user satisfaction.

- Game Application

- What’s Important:

- Graphics: Games often rely on high-quality graphics to immerse users. Poor graphics can make the game feel unprofessional or less enjoyable.

- Latency: In multiplayer games, lag (or latency) can ruin the experience. Players need to have real-time interaction with minimal delay.

- Immersive Experience: Games must be engaging. This means testing gameplay mechanics, sound design, and overall flow to ensure that the game keeps the player interested.

- Testing Focus:

- Graphics and Visual Testing: Ensure the game renders smoothly at high frame rates, and that the visuals are consistent across different platforms.

- Latency Testing: For online or multiplayer games, check how quickly actions are reflected in the game and whether there’s a noticeable delay during interactions.

- Game Functionality Testing: Test for bugs that may crash the game, break levels, or disrupt gameplay.

- Example: In a racing game, testing might focus on ensuring that car movements and background transitions are smooth. A stuttering or choppy visual experience can make the game feel less enjoyable, which could cause players to stop playing.

Practical Tip: Tailor Your Testing Strategy

- What to Do:

When planning your testing strategy, always consider the specific requirements, risks, and goals of the application you’re testing. A banking app requires rigorous security tests and performance checks, while a social media app focuses more on usability and responsiveness. A game needs to emphasize fluid graphics and a fun user experience.

- Why It Matters:

Applying the right testing method for the type of application ensures that you catch the issues that really matter to users and that the software functions as intended. Testing with the context of the application in mind helps prevent wasting time on unnecessary tests or overlooking critical aspects.

- Example:

If you apply the same testing approach to a banking app that you would for a game, you might focus too much on graphics or player experience and overlook critical areas like data security and transaction accuracy. On the other hand, if you test a game like a business application, you might miss out on ensuring it has fun mechanics and smooth performance during play.

Summary:

Each application has different testing needs based on its context—what works for one might not work for another. To be effective, your testing strategy should be adapted to the specific purpose, audience, and risk factors of the software you’re testing.

7. Absence of Error Fallacy

Clarification:

Just because an application doesn’t have any obvious bugs or technical errors doesn’t mean it’s perfect or ready to be used. Software can still fail if it doesn’t meet users’ expectations or the goals it was designed to achieve. This is called the “absence of error fallacy”—the mistaken belief that if an app is technically correct, it’s fully functional. Effective testing should check not only that the software works correctly from a technical perspective, but also that it is fit for its intended purpose and meets the needs of its users.

Detailed Example:

Let’s take the example of a weather app:

- Technical Accuracy:

The app might display the temperature and weather conditions correctly. It could show the current temperature as 75°F, with accurate data on wind speed, humidity, and forecasted weather for the day. From a purely technical perspective, everything is working fine.

- Fit for Purpose:

However, if the app fails to include location-based settings (meaning it doesn’t automatically show weather based on the user’s current location), it would be less useful. Users would have to manually enter their city or zip code every time they open the app. This is a major usability issue, even though the app is technically error-free.

Why it’s a problem:

- Imagine you’re out and about and want to quickly check the weather near you. If the app doesn’t automatically detect your location or allow you to set it easily, you are forced to take extra steps. This could lead to frustration and might cause you to stop using the app altogether.

- The app is accurate (temperature readings are correct), but it’s not meeting the primary need of users, which is convenience—they want to know the weather where they are, without having to search for it.

Key Takeaway:

Effective testing goes beyond just checking for bugs. It must also ensure that the software aligns with the goals it is supposed to achieve and meets user expectations. In other words, software may be “error-free” technically, but if it doesn’t serve its purpose well, it might still fail. Testing should verify:

- Technical correctness: Does the app work as intended without crashing or malfunctioning?

- User needs: Does the app actually solve the problem it was designed to solve in a way that users expect and find useful?

Example:

Consider an e-commerce website. Technically, it could be error-free—products are listed, payments are processed correctly, and there are no crashes. But if the website has a confusing checkout process (perhaps with too many steps or unclear instructions), users might abandon their cart. Even though there are no bugs, the user experience is poor, and the site isn’t meeting its main business goal—selling products efficiently.

In summary, the absence-of-errors fallacy highlights the importance of testing beyond the absence of bugs. The software needs to work well not only technically but also in a way that delivers value to the user and achieves the business objectives.

Conclusion

The 7 Principles of Software Testing provide a strong foundation for designing effective and efficient testing strategies. These principles guide testers in ensuring that software is not only functional but also reliable, user-friendly, and meets both technical and business requirements.

By applying these principles, testers can:

- Optimize Testing Efforts

Testers can focus on the most critical areas of the software, ensuring that resources are used effectively and that time isn’t wasted testing parts of the system that are already well understood or less risky.

- Increase Defect Detection

Following the principles helps testers find defects that might otherwise be missed. By thinking about things like the software’s purpose, user needs, and potential risks, testers are more likely to uncover hidden issues early in the development cycle.

- Ensure the Software Meets Both Technical and Business Requirements

Effective testing isn’t just about ensuring the code runs correctly, but also about ensuring the software aligns with the business goals and the needs of the users. This means that testing should also verify that the software meets user expectations and solves the problem it was designed for.

Bonus Tips: Myths and Unique Insights

Here, we’ll break down some common myths about software testing and provide unique insights that can help you improve your testing approach. These tips are designed to give you a more well-rounded understanding of software testing and help you make better decisions as a tester or team member involved in quality assurance.

Myth 1: Testing Can Make Software 100% Bug-Free

Clarification:

No matter how much effort you put into testing, it’s impossible to guarantee that software will be completely free of defects. Software is often complex, and there are many variables—different devices, operating systems, networks, user behaviors, etc.—that can introduce unforeseen issues. Testing helps minimize risks, but it doesn’t eliminate them entirely.

Detailed Example:

Imagine you’re testing an e-commerce website. You run hundreds of tests on different browsers, devices, and operating systems, checking for bugs and defects. You find and fix several bugs—like incorrect display on mobile devices or errors when users add products to their cart. However, you can’t test every single possible combination of user actions, browser settings, or network conditions. There may still be edge cases that weren’t covered in your testing, such as a user on an outdated browser with slow internet experiencing issues.

The key point here is that testing doesn’t guarantee perfection. It’s about reducing the likelihood of defects, but there will always be unpredictable scenarios where bugs could appear later.
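A quick back-of-the-envelope calculation shows why covering every combination is out of reach. The sketch below is only illustrative: the lists of browsers, operating systems, device types, and network conditions, as well as the figures of 50 user flows and 2 minutes per run, are assumptions chosen to keep the arithmetic simple.

```python
from itertools import product

# Assumed test dimensions (not every pairing exists in practice; this is a rough count).
browsers = ["Chrome", "Firefox", "Safari", "Edge", "Opera"]
operating_systems = ["Windows", "macOS", "Linux", "Android"]
device_types = ["desktop", "tablet", "phone"]
networks = ["fast", "slow", "offline"]

# Every combination of the four dimensions.
configurations = list(product(browsers, operating_systems, device_types, networks))

user_flows = 50        # assumed number of distinct user journeys to check
minutes_per_run = 2    # assumed time to run one journey on one configuration

total_runs = len(configurations) * user_flows
total_hours = total_runs * minutes_per_run / 60

print(len(configurations))  # 180
print(total_runs)           # 9000
print(total_hours)          # 300.0
```

Even this small model needs 300 hours for a single pass, and it ignores browser versions, screen sizes, and the order in which users perform actions. Each extra dimension multiplies the total again.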

Myth 2: Testing is Only About Finding Bugs

Clarification:

While finding bugs is one of the main tasks of testing, it’s not the only purpose. Testing is also about improving the overall quality of the software. It helps to validate requirements, ensuring the system meets the user’s expectations and business goals. The goal of testing is to ensure that the system works well, is usable, and solves the problem it was created for—not just to find errors.

Detailed Example:

Let’s say you’re testing a project management app. You might find a bug where users can’t create tasks in one of the app’s modules. But beyond just finding this bug, testing also involves looking at user experience: Is the task creation process intuitive? Are the labels clear? Does the feature match the initial business requirements, like allowing users to set deadlines or assign tasks to team members?

Testing also includes ensuring that the app works as intended and solves the user’s problems, like helping them manage tasks more efficiently. It’s about ensuring quality, both from a technical standpoint (the app behaves correctly) and a user experience standpoint (the app is useful and intuitive).

Insight 1: Test Automation is Not a Silver Bullet

Clarification:

While test automation can speed up certain types of testing—especially regression testing (testing whether changes in the software break existing features)—it is not a replacement for manual testing. Automation helps with repetitive tasks and can run tests quickly, but there are limitations. For example, automation can’t handle exploratory testing, where testers use creativity and intuition to find hidden issues. Some bugs are only found through human observation and thinking outside the box.

Detailed Example:

Imagine you have an online banking app. You set up automated tests to check that the core functions—like logging in, checking balances, and making transfers—work across different devices and browsers. These automated tests will run faster than manual tests and catch obvious bugs. However, if a new feature is introduced, like setting up personalized spending alerts, automation wouldn’t be able to check whether the feature is intuitive or if users understand how to set it up correctly. This requires manual testing, including exploratory testing to simulate real-world user behavior and find subtle problems.

Bottom line: Automation is a powerful tool, but it cannot fully replace the need for manual testing, especially for creative and exploratory testing tasks.
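As a rough idea of what the automated part might look like, here is a small regression suite written with Python’s built-in `unittest` module. The `transfer` function and the account names are hypothetical stand-ins for the banking app’s real logic.

```python
import unittest


def transfer(balances: dict, source: str, target: str, amount: int) -> None:
    """Hypothetical transfer: move `amount` from one account to another, in place."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if balances[source] < amount:
        raise ValueError("insufficient funds")
    balances[source] -= amount
    balances[target] += amount


class TransferRegressionTests(unittest.TestCase):
    def setUp(self):
        # Fresh balances before every test so tests do not affect each other.
        self.balances = {"checking": 100, "savings": 50}

    def test_transfer_moves_money(self):
        transfer(self.balances, "checking", "savings", 30)
        self.assertEqual(self.balances, {"checking": 70, "savings": 80})

    def test_overdraft_is_rejected(self):
        with self.assertRaises(ValueError):
            transfer(self.balances, "checking", "savings", 500)
        # A rejected transfer must leave both balances untouched.
        self.assertEqual(self.balances, {"checking": 100, "savings": 50})


# Run the suite programmatically; in a real project a test runner would do this.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TransferRegressionTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Checks like these can run on every code change in seconds. What they cannot tell you is whether a customer understands the transfer screen, which is where manual and exploratory testing come in.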

Insight 2: Collaboration Enhances Testing

Clarification:

Collaboration between different roles—developers, business analysts, and end-users—can significantly improve the effectiveness of your testing process. When you involve people from different areas of expertise, you get diverse perspectives that help you identify potential issues and gaps in your testing strategy. This leads to better test coverage and ultimately more thorough testing.

Detailed Example:

Consider a customer relationship management (CRM) system being developed for a large company. The developers know the technical details of how the system is built, but business analysts understand the specific needs of the company and the workflows the system should support. Meanwhile, the end-users—the sales team who will use the CRM every day—can provide feedback on whether the system is actually easy to use and meets their needs.

By involving all of these groups, you can ensure that testing covers technical accuracy (does the CRM work without crashes?), business goals (does it streamline sales processes?), and user experience (is it easy to use for salespeople?). This collaborative approach ensures that all aspects of the software are tested effectively and that potential issues are caught early.

Pro Tip: Prioritize Testing Based on Risk

Clarification:

Not all parts of the software are equally important. Some features are critical to the business or to the user experience, while others are less impactful. When testing, it’s important to prioritize areas that present the highest risk—like core features or areas that have the potential for the most serious impact if something goes wrong.

Detailed Example:

Imagine you’re testing an online ticket booking system. The payment system and ticket confirmation are the most critical features because they directly impact whether the user can complete their transaction successfully. If the payment process is buggy or users can’t receive their ticket confirmation, the business could lose customers and revenue.

On the other hand, if the color scheme on the confirmation page is off, that’s a lower priority. While it might be annoying to the user, it doesn’t prevent them from completing the transaction. Therefore, you would prioritize testing the payment process and confirmation system first and focus less on cosmetic issues.

This approach ensures that you focus your efforts where they’ll have the most impact on the user experience and business success.
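One simple way to put this into practice is to score each feature for how likely it is to fail and how bad a failure would be, then test in order of the product. The sketch below assumes a 1 to 5 scale for both scores; the scores themselves and the "seat search filters" feature are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    likelihood: int  # assumed scale: 1 (rarely fails) to 5 (often fails)
    impact: int      # assumed scale: 1 (cosmetic) to 5 (blocks the transaction)

    @property
    def risk(self) -> int:
        # Common heuristic: risk score = likelihood x impact.
        return self.likelihood * self.impact


features = [
    Feature("confirmation page color scheme", likelihood=3, impact=1),
    Feature("payment processing", likelihood=3, impact=5),
    Feature("ticket confirmation", likelihood=2, impact=5),
    Feature("seat search filters", likelihood=3, impact=3),
]

# Highest risk first: this is the order in which to spend testing effort.
ranked = sorted(features, key=lambda f: f.risk, reverse=True)

for feature in ranked:
    print(f"{feature.risk:>2}  {feature.name}")
```

With these scores, payment processing and ticket confirmation come out on top and the color scheme lands last, matching the priorities described above. The numbers are a conversation starter for the team rather than a precise measurement.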

Summary:

- Testing can’t guarantee 100% bug-free software, but it helps minimize risks.

- Testing is not only about finding bugs—it’s about improving overall software quality and ensuring it meets business and user requirements.

- Test automation is valuable for repetitive tasks but can’t replace manual testing for exploratory or creative tasks.

- Collaboration between developers, business analysts, and end-users leads to better testing and coverage.

- Prioritize testing based on risk—focus on critical features that impact user experience and business goals.

By understanding these myths and applying these insights, testers can approach their work with a clearer, more practical mindset, leading to more effective and meaningful testing.

Frequently Asked Questions

1. What are the 7 Principles of Software Testing?

The 7 principles of software testing are:

- Testing shows the presence of defects: Testing can prove that defects exist but not that a product is defect-free.

- Exhaustive testing is impossible: It’s not feasible to test all possible scenarios; testers must prioritize important test cases.

- Early testing: Testing should begin as early as possible in the development lifecycle.

- Defect clustering: Most defects tend to be found in a small number of modules.

- Pesticide paradox: Repeating the same test cases will eventually stop finding new defects.

- Testing is context dependent: Testing strategies vary based on the type of software and its intended use.

- Absence-of-errors fallacy: A product with no detected defects is not guaranteed to meet user requirements.

2. Why is it said that testing can only show the presence of defects, not their absence?

No matter how much testing is done, there is always a possibility that some defects remain. Testing can confirm that a system has bugs, but it cannot guarantee that all bugs have been found or that the software is completely error-free.

3. Why is exhaustive testing not possible?

Exhaustive testing, where every possible input combination and scenario is tested, is impractical due to time, cost, and resource constraints. Instead, testers prioritize test cases based on risk, importance, and likelihood of failure.

4. What is the ‘Pesticide Paradox’ in software testing?

The Pesticide Paradox refers to the idea that if the same test cases are used repeatedly, they will no longer find new defects. To overcome this, testers need to review and update test cases periodically to ensure they remain effective.

5. What is the ‘Absence-of-Errors Fallacy’ in software testing?

The Absence-of-Errors Fallacy highlights that even if a software product has no identified defects, it doesn’t guarantee that it meets user needs or is fit for purpose. Ensuring the software aligns with user requirements is essential.

6. How can testers handle the challenge of non-exhaustive testing?

Testers can manage non-exhaustive testing by using risk-based testing, focusing on critical functionality, prioritizing test cases, and applying test design techniques such as boundary value analysis and equivalence partitioning.
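Here is a minimal sketch of the two test design techniques named above. It assumes a hypothetical rule that an order may contain 1 to 10 tickets; the `is_valid_quantity` function and the chosen values are illustrative only.

```python
def is_valid_quantity(quantity: int) -> bool:
    """Hypothetical rule: an order may contain 1 to 10 tickets."""
    return 1 <= quantity <= 10


# Equivalence partitioning: one representative value per class of inputs
# that the system should treat the same way -> (value, expected result).
partitions = {
    "below range": (-5, False),
    "in range": (5, True),
    "above range": (50, False),
}

# Boundary value analysis: values at and right next to each edge of the range.
boundaries = {0: False, 1: True, 2: True, 9: True, 10: True, 11: False}

cases = list(partitions.values()) + list(boundaries.items())
failures = [(value, expected) for value, expected in cases
            if is_valid_quantity(value) != expected]

print(len(cases), failures)  # 9 []
```

Nine well-chosen cases stand in for every possible integer input. An off-by-one mistake such as writing `1 < quantity` would be caught by the boundary value 1, even though the mid-range representative 5 would still pass.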

7. How does the principle ‘Testing is context dependent’ influence testing?

This principle means that the testing strategy should vary based on the type of software, industry standards, and user requirements. For example, testing a banking application requires a different approach compared to testing a mobile game.

8. Why is defect clustering an important principle in testing?

Defect clustering helps testers prioritize their efforts by identifying the areas of the application that are more likely to have defects. This improves testing efficiency and ensures that critical issues are detected early.
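A simple tally of past defects per module is often enough to spot clusters. The sketch below uses a made-up defect log; the module names and counts are purely illustrative.

```python
from collections import Counter

# Illustrative defect log: (defect id, module). The data is invented.
defect_log = [
    (1, "checkout"), (2, "checkout"), (3, "search"), (4, "checkout"),
    (5, "profile"), (6, "checkout"), (7, "search"), (8, "checkout"),
    (9, "checkout"), (10, "login"),
]

per_module = Counter(module for _, module in defect_log)
total = sum(per_module.values())

# Modules with the most defects first, with their share of all defects.
for module, count in per_module.most_common():
    print(f"{module:<10}{count:>3}  {count / total:.0%}")
```

In this invented log, one module accounts for 6 of the 10 defects, so it would be a natural place to concentrate extra test effort.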

9. What is regression testing, and how is it related to the pesticide paradox?

Regression testing checks that new code changes have not broken existing functionality. Because of the pesticide paradox, a regression suite that never changes gradually stops finding new defects, so it needs to be reviewed and updated regularly to stay effective.

10. Why is risk-based testing important?

Risk-based testing focuses on identifying and prioritizing testing efforts in areas with the highest risk. This ensures that critical functionalities are tested thoroughly, even if exhaustive testing is not possible.

11. Why is user feedback important in the testing process?

User feedback helps identify issues that may not have been caught during formal testing. It provides real-world insights into the software’s usability, functionality, and performance in different environments.
